Portfolio: Inner Path

Program: MSc Virtual and Augmented Reality (Programming and Computer Science)

Student ID: 33628590

Name: Saurabhkumar Ramanbhai Parmar

Project Category: VR App

Team Members: 4

Duration: 3 months

Role: Programming, Development, Testing, Debugging, Project Management

Project Overview: "Inner Path"

"Inner Path" is a narrative-driven Cognitive Behavioural Therapy (CBT) experience with an integrated Virtual AI Mentor, delivered through Virtual Reality (VR). The project aims to provide an immersive therapeutic experience for individuals struggling with anxiety and depression, particularly those who may not have access to traditional therapy. Through our research, we identified significant barriers to accessing therapy — including high costs and long wait times for in-person appointments. Many individuals face extensive delays before they can begin treatment. "Inner Path" was developed to address this gap, offering a more accessible, engaging, and immersive therapeutic experience. By leveraging VR technology, users engage in a self-guided narrative therapy session, built upon professional consultation and CBT principles. The project creates an environment where users can ease anxiety, interact naturally, and receive guidance from a trained virtual AI mentor, helping to bridge the gap between professional therapy and immediate personal support.

Role and Responsibilities:

Saurabhkumar Parmar:

Programming, Development, Project Management, Scene Management

Fin:

3D Modeling, Asset Creation, Scene Management, Therapy Script Handling

Louis:

Therapy Script Development, Ethical Management of Therapy

Vardaan:

Sound Design

Research and Related Materials:

Our research showed that while mobile and chatbot therapy platforms exist (such as Wysa, which is NHS-approved), no comprehensive VR solution focused on narrative CBT was readily available. Other companies offer 360-degree video therapy or mobile apps. However, we found no platform that combines immersive VR, guided narrative therapy in a forest environment, and real-time AI interaction. This confirmed the uniqueness and relevance of Inner Path.

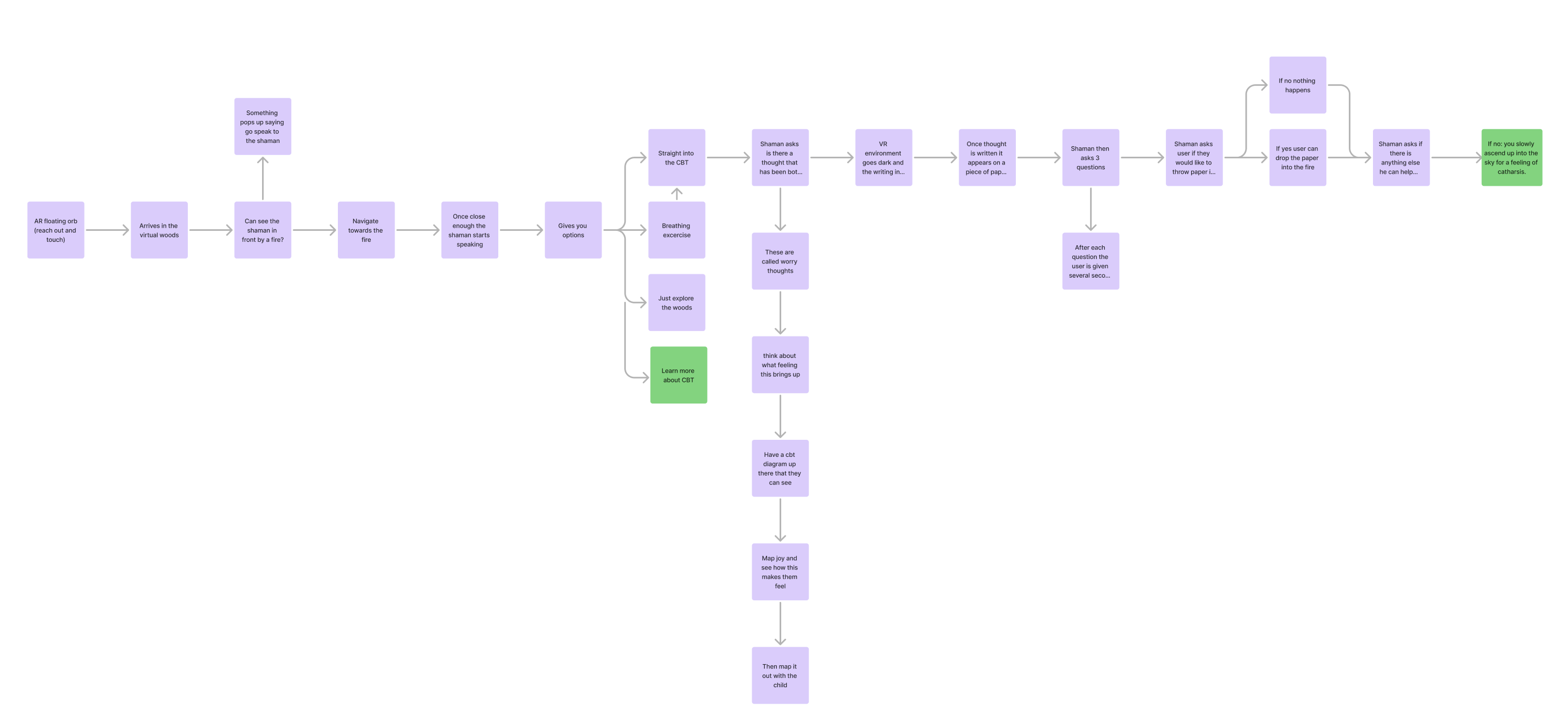

User Journey (UX Flow):

Upon launching Inner Path, users are transported to a calming forest environment where they follow a guided path towards the Virtual AI Mentor. Along the journey, users engage in multiple interactive points designed to support therapy goals, including:

- Breathing exercises

- Object interactions

- Fire-based breath control

- Conversations with the Virtual AI Mentor

- Writing final thoughts on an interactive board

The entire experience is designed to be intuitive, natural, and therapeutically beneficial.

Application Modules:

1. User Navigation:

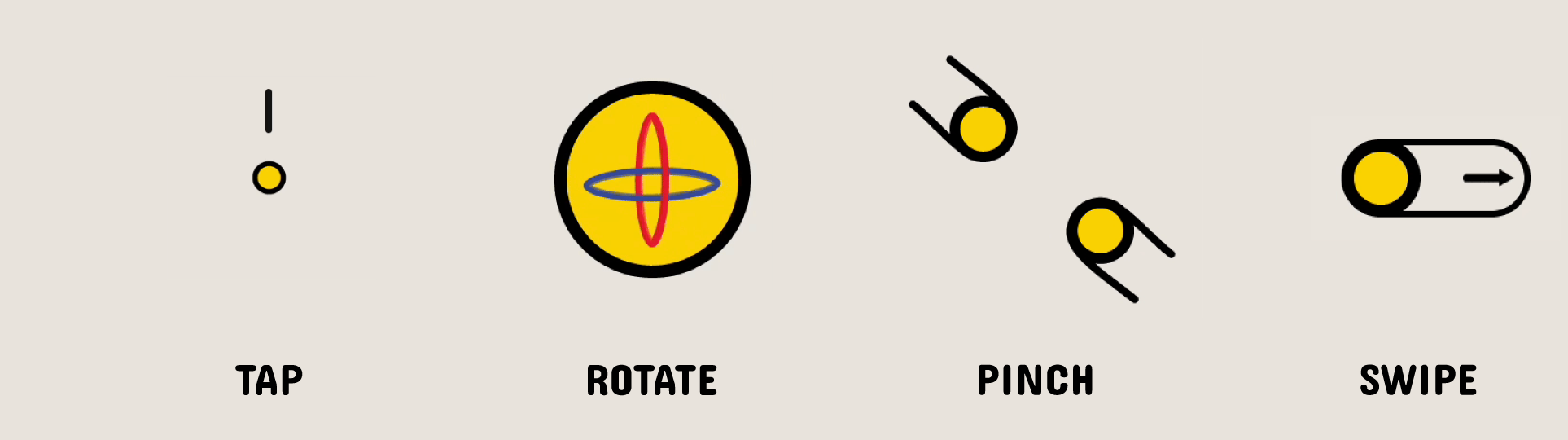

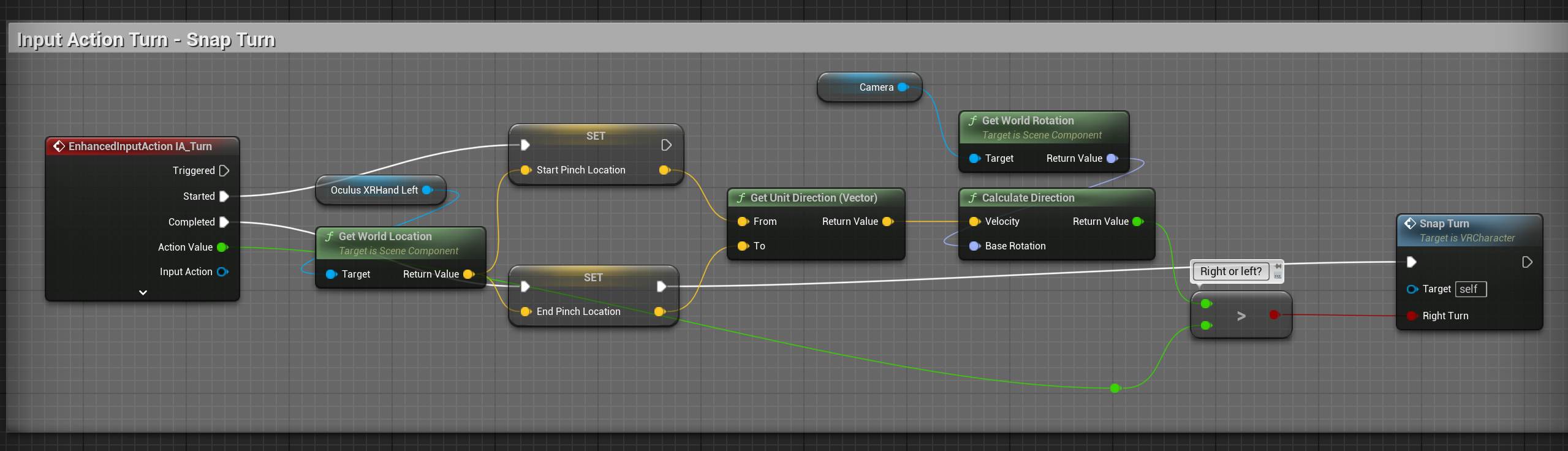

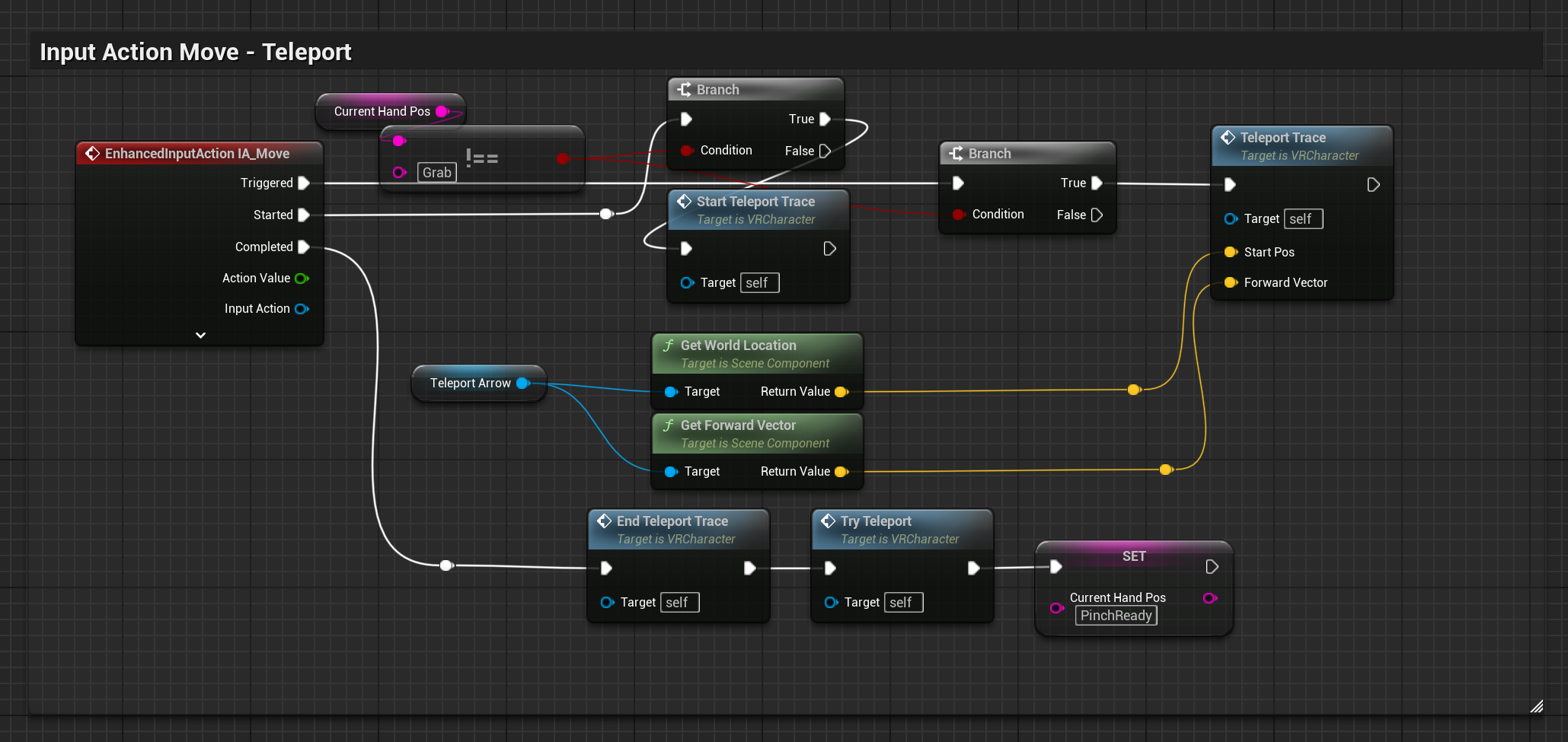

Users are introduced to VR navigation techniques through handcrafted wooden boards with simple illustrations and written instructions. Core navigation includes:

- Teleportation: a right-hand pinch gesture to aim at and move to a new position.

- Rotation: a left-hand pinch-and-slide gesture to rotate the viewpoint.

Each interaction stage is guided to ensure a seamless user experience.
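The two navigation gestures can be sketched in plain C++ to show the underlying logic. Inner Path implements this in Blueprints, so the classes below (`PinchDetector`, `SlideTurn`, `Vec3`) are hypothetical. The thresholds (1.5 cm to start a pinch, 3 cm to release it, and 8 cm of sideways travel per 30° snap turn) are illustrative assumptions, not the project's tuned values. Hysteresis uses separate press and release distances, which keeps tracking jitter from toggling the pinch on and off.

```cpp
#include <cmath>

// Hypothetical 3D vector (positions in cm); in Unreal, FVector plays this role.
struct Vec3 {
    float x, y, z;
};

inline float Distance(const Vec3& a, const Vec3& b) {
    const float dx = a.x - b.x, dy = a.y - b.y, dz = a.z - b.z;
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}

// Pinch detector with hysteresis: the pinch starts when thumb and index tips
// come closer than pressCm and only ends once they separate beyond releaseCm.
class PinchDetector {
public:
    PinchDetector(float pressCm = 1.5f, float releaseCm = 3.0f)
        : pressCm_(pressCm), releaseCm_(releaseCm) {}

    // Returns true on the frame the pinch is released (the "move now" event).
    bool Update(const Vec3& thumbTip, const Vec3& indexTip) {
        const float d = Distance(thumbTip, indexTip);
        if (!pinching_ && d < pressCm_) {
            pinching_ = true;
        } else if (pinching_ && d > releaseCm_) {
            pinching_ = false;
            return true;  // released this frame
        }
        return false;
    }

    bool IsPinching() const { return pinching_; }

private:
    float pressCm_, releaseCm_;
    bool pinching_ = false;
};

// Snap turn from pinch-and-slide: while pinching, sideways hand travel is
// measured from an anchor; each slideStepCm of travel yields one stepDeg turn.
class SlideTurn {
public:
    SlideTurn(float slideStepCm = 8.0f, float stepDeg = 30.0f)
        : slideStepCm_(slideStepCm), stepDeg_(stepDeg) {}

    // handX is the lateral hand position in cm; returns the yaw change in degrees.
    float Update(bool pinching, float handX) {
        if (!pinching) { active_ = false; return 0.0f; }
        if (!active_) { active_ = true; anchorX_ = handX; return 0.0f; }
        const float travel = handX - anchorX_;
        if (std::fabs(travel) >= slideStepCm_) {
            anchorX_ = handX;  // re-anchor so each step needs fresh travel
            return travel > 0.0f ? stepDeg_ : -stepDeg_;
        }
        return 0.0f;
    }

private:
    float slideStepCm_, stepDeg_;
    bool active_ = false;
    float anchorX_ = 0.0f;
};
```

In an engine, `Update` would run every frame. The right hand's thumb and index fingertip positions would drive teleportation, and the left hand's would drive rotation.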

2. Interactions:

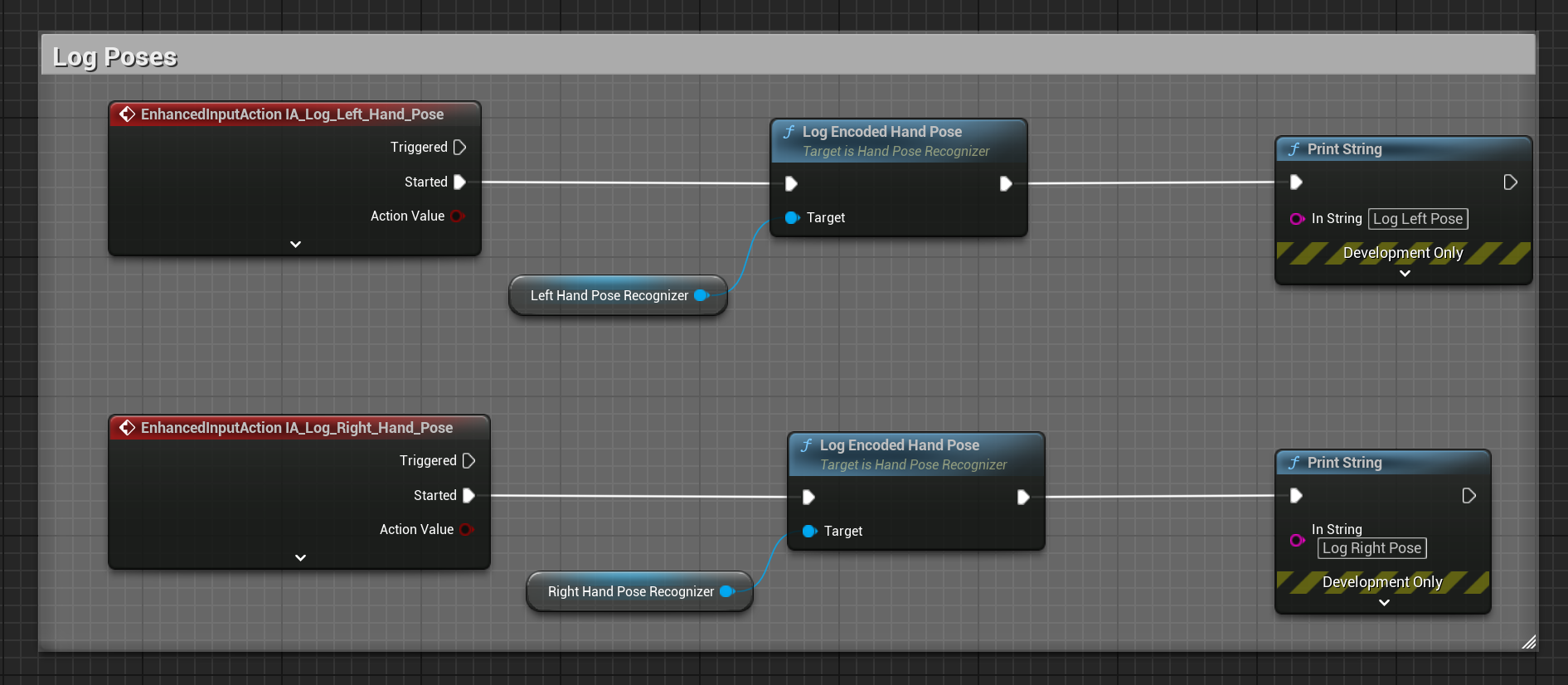

Key interactions enhance immersion:

- Teleportation: pinch and release gestures to move.

- Object Interaction: picking up and throwing bricks to simulate natural exploration, using palm detection and pinch gestures.

- Breathing Exercise: engaging with a virtual fire that reacts to hand-driven breathing motions.

- Virtual Mentor Communication: starting and ending conversations with custom left- and right-hand gestures.

- Drawing Board: writing or drawing thoughts to conclude the therapy session.
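For the brick throw, a common approach is to estimate the release velocity from a short history of hand positions instead of a single frame. The sketch below assumes that palm positions and timestamps are sampled every frame while a brick is held. `ThrowEstimator` and its five-sample window are hypothetical and are not taken from the project.

```cpp
#include <cstddef>
#include <deque>

struct Vec3 { float x, y, z; };

// Keeps the last few palm samples while a brick is held and, on release,
// returns the average velocity across that window. This is steadier than
// the single-frame velocity from noisy hand tracking.
class ThrowEstimator {
public:
    explicit ThrowEstimator(std::size_t window = 5) : window_(window) {}

    void AddSample(const Vec3& palm, float timeSeconds) {
        samples_.push_back({palm, timeSeconds});
        if (samples_.size() > window_) samples_.pop_front();
    }

    // Velocity (units per second) between the oldest and newest samples.
    Vec3 ReleaseVelocity() const {
        if (samples_.size() < 2) return {0.0f, 0.0f, 0.0f};
        const Sample& a = samples_.front();
        const Sample& b = samples_.back();
        const float dt = b.time - a.time;
        if (dt <= 0.0f) return {0.0f, 0.0f, 0.0f};
        return {(b.pos.x - a.pos.x) / dt, (b.pos.y - a.pos.y) / dt,
                (b.pos.z - a.pos.z) / dt};
    }

    void Clear() { samples_.clear(); }

private:
    struct Sample { Vec3 pos; float time; };
    std::size_t window_;
    std::deque<Sample> samples_;
};
```

On release, the returned velocity would be applied to the brick's physics body, and the history would then be cleared for the next grab.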

3. Breathing Exercise:

Users perform a breathing exercise by raising and lowering their hand, synchronized with a responsive fire animation. This activity was developed with professional advice to deepen the breathing experience and increase user engagement.
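The hand-to-fire mapping can be sketched in two steps: normalise the hand's height between two calibrated points, then smooth the result. The class name, calibration heights, smoothing factor and 0.5 to 1.5 scale range are assumptions for illustration. In Inner Path, the equivalent logic lives in Blueprints.

```cpp
#include <algorithm>

// Maps hand height between a calibrated low (exhale) and high (inhale) point
// onto a 0..1 breath value. An exponential moving average then smooths that
// value so the fire grows and shrinks gently instead of flickering.
class BreathFire {
public:
    BreathFire(float lowCm, float highCm, float smoothing = 0.2f)
        : lowCm_(lowCm), highCm_(highCm), smoothing_(smoothing) {}

    // Returns the fire scale for this frame, between kMinScale and kMaxScale.
    float Update(float handHeightCm) {
        float t = (handHeightCm - lowCm_) / (highCm_ - lowCm_);
        t = std::clamp(t, 0.0f, 1.0f);
        breath_ += smoothing_ * (t - breath_);  // move part-way toward target
        return kMinScale + breath_ * (kMaxScale - kMinScale);
    }

    float Breath() const { return breath_; }

private:
    static constexpr float kMinScale = 0.5f;
    static constexpr float kMaxScale = 1.5f;
    float lowCm_, highCm_, smoothing_;
    float breath_ = 0.0f;
};
```

A smaller `smoothing` value makes the fire lag the hand more, which can encourage slower, deeper breaths.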

4. Talking with the Virtual AI Mentor:

Through a simple thumbs-up gesture, users initiate a session with the AI mentor (powered by Convai). The mentor provides cognitive therapy advice, making the experience more personalized and meaningful.
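A thumbs-up can be recognised from per-finger curl values combined with the thumb's orientation. This sketch assumes that the hand-tracking layer already provides a curl value from 0 to 1 for each finger, plus the dot product between the thumb direction and world up. `IsThumbsUp` and its thresholds are hypothetical.

```cpp
#include <array>

// Per-finger curl in [0, 1]: 0 = fully extended, 1 = fully curled.
// Order: thumb, index, middle, ring, pinky.
using FingerCurls = std::array<float, 5>;

// Thumbs-up: thumb extended and pointing up, the other four fingers curled.
// thumbUpDot is the dot product of the thumb direction with world up.
bool IsThumbsUp(const FingerCurls& curls, float thumbUpDot,
                float extendedMax = 0.3f, float curledMin = 0.7f,
                float upMin = 0.7f) {
    if (curls[0] > extendedMax || thumbUpDot < upMin) return false;
    for (int i = 1; i < 5; ++i) {
        if (curls[i] < curledMin) return false;  // a finger is not curled
    }
    return true;
}
```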

5. Writing Thoughts:

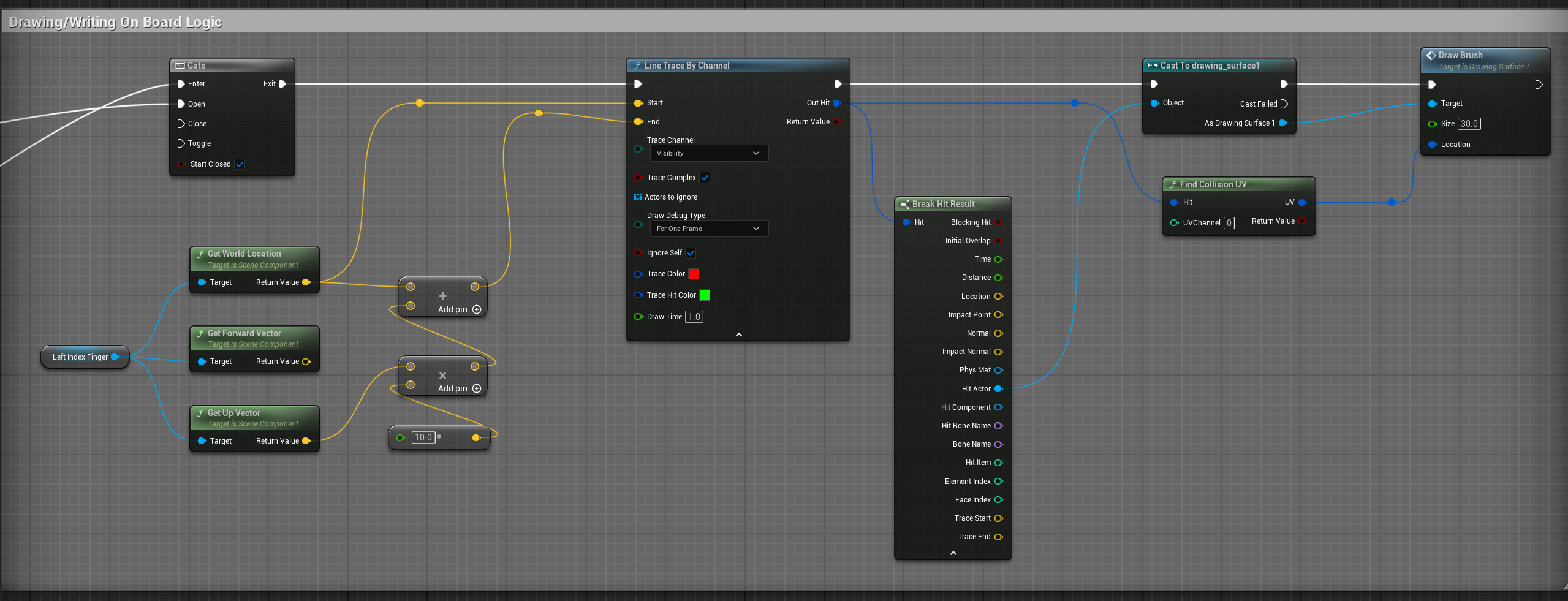

At the session’s end, users express their negative thoughts on a virtual drawing board using fingertip drawing mechanics, encouraging emotional release and reflection.
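Fingertip drawing can be sketched as sampling the fingertip's position on the board while it is within a small touch distance of the surface. The sketch assumes that the fingertip has already been projected into board coordinates (`uv`, in cm) together with its distance to the board plane. `DrawingBoard`, the 1 cm touch distance and the 0.5 cm point spacing are illustrative assumptions.

```cpp
#include <cmath>
#include <vector>

struct Vec2 { float x, y; };

// Points are recorded only while the fingertip touches the board and only
// when it has moved at least minSpacingCm. This keeps strokes smooth and the
// point count low. Lifting the finger ends the current stroke.
class DrawingBoard {
public:
    DrawingBoard(float touchCm = 1.0f, float minSpacingCm = 0.5f)
        : touchCm_(touchCm), minSpacingCm_(minSpacingCm) {}

    void Update(const Vec2& uv, float distanceToBoardCm) {
        const bool touching = std::fabs(distanceToBoardCm) <= touchCm_;
        if (!touching) { drawing_ = false; return; }
        if (!drawing_) {  // finger just touched: start a new stroke
            strokes_.push_back(std::vector<Vec2>{uv});
            drawing_ = true;
            return;
        }
        const Vec2& last = strokes_.back().back();
        if (std::hypot(uv.x - last.x, uv.y - last.y) >= minSpacingCm_) {
            strokes_.back().push_back(uv);
        }
    }

    const std::vector<std::vector<Vec2>>& Strokes() const { return strokes_; }

private:
    float touchCm_, minSpacingCm_;
    bool drawing_ = false;
    std::vector<std::vector<Vec2>> strokes_;
};
```

Each stroke is a list of points that a renderer could draw as connected line segments on the board surface.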

6. Sound Design:

Custom-designed spatial sound enhances immersion. Ambient forest noises, adaptive fire sounds, bird chirps, and flowing water all contribute to a serene, realistic environment.
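Spatial audio relies on distance attenuation: a source plays at full volume nearby and fades out with distance. The sketch below uses a simple linear falloff, similar in spirit to the inner radius and falloff distance offered by engine attenuation settings. It also assumes that the "adaptive" fire sound scales its base volume with the 0 to 1 breath value from the breathing exercise. The function names, radii (in cm) and volume range are hypothetical.

```cpp
#include <algorithm>

// Linear distance attenuation: full volume inside innerRadius, fading
// linearly to silence over falloffDistance beyond it.
float LinearAttenuation(float distance, float innerRadius, float falloffDistance) {
    if (distance <= innerRadius) return 1.0f;
    if (falloffDistance <= 0.0f) return 0.0f;
    const float t = (distance - innerRadius) / falloffDistance;
    return std::clamp(1.0f - t, 0.0f, 1.0f);
}

// Adaptive fire loudness (assumption): a stronger breath raises the fire's
// base volume from 0.4 to 1.0 before distance attenuation is applied.
float FireVolume(float breath, float distance) {
    const float base = 0.4f + 0.6f * std::clamp(breath, 0.0f, 1.0f);
    return base * LinearAttenuation(distance, 200.0f, 1500.0f);
}
```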

Inner Path Technical Overview:

1. Technologies Used for App Development:

2. Key User Interactions with the App:

Users can launch the "Inner Path" app on the Meta Quest 3 standalone VR headset. The app is designed to be intuitive and user-friendly, allowing users to navigate the forest environment using hand gestures. The most important gestures are shown below.

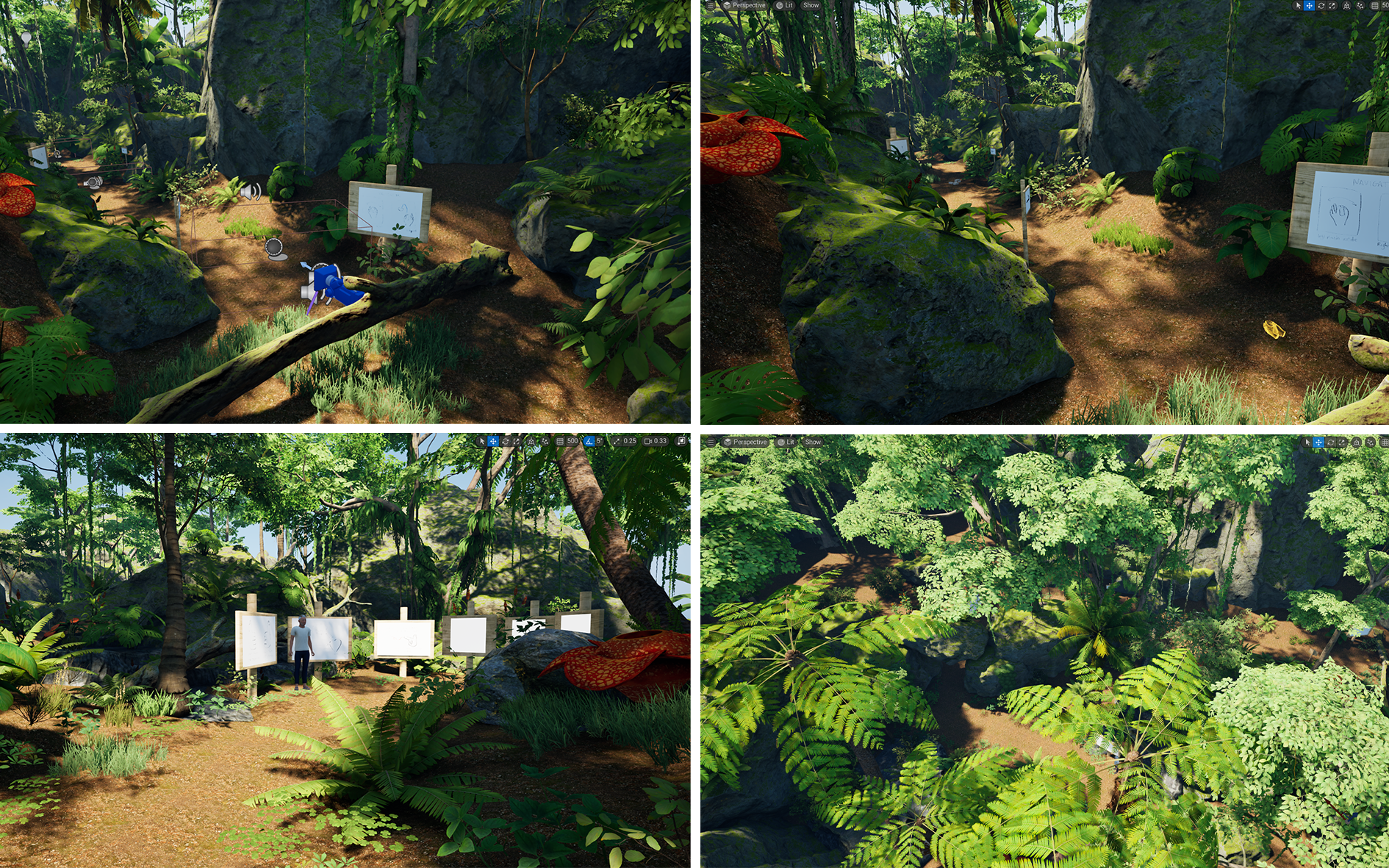

3D Assets and Scene Design:

While I supported asset creation, my primary responsibility was the forest scene setup in VR. I worked with Fin to create a cohesive, natural environment using 3D models like trees, grass, rocks, and flowers. Fin designed specific assets such as the drawing board and navigation signboards.

Scene testing demo in the Meta Quest standalone app

UI Design:

UI elements, such as the visual designs for navigation and the drawing boards, were primarily handled by Fin. I contributed by integrating these assets seamlessly into the VR environment.

Sound Design:

Sound design was managed by Vardaan. The spatial audio system, including fire soundscapes and ambient natural sounds, was meticulously implemented to strengthen immersion and reinforce the therapy narrative.

Project Management:

Project management responsibilities were shared between Fin and me. We established project timelines, assigned tasks, coordinated development stages, and ensured that deliverables were met efficiently. Louis contributed to the therapy script and narrative structure, ensuring ethical adherence and therapeutic accuracy.

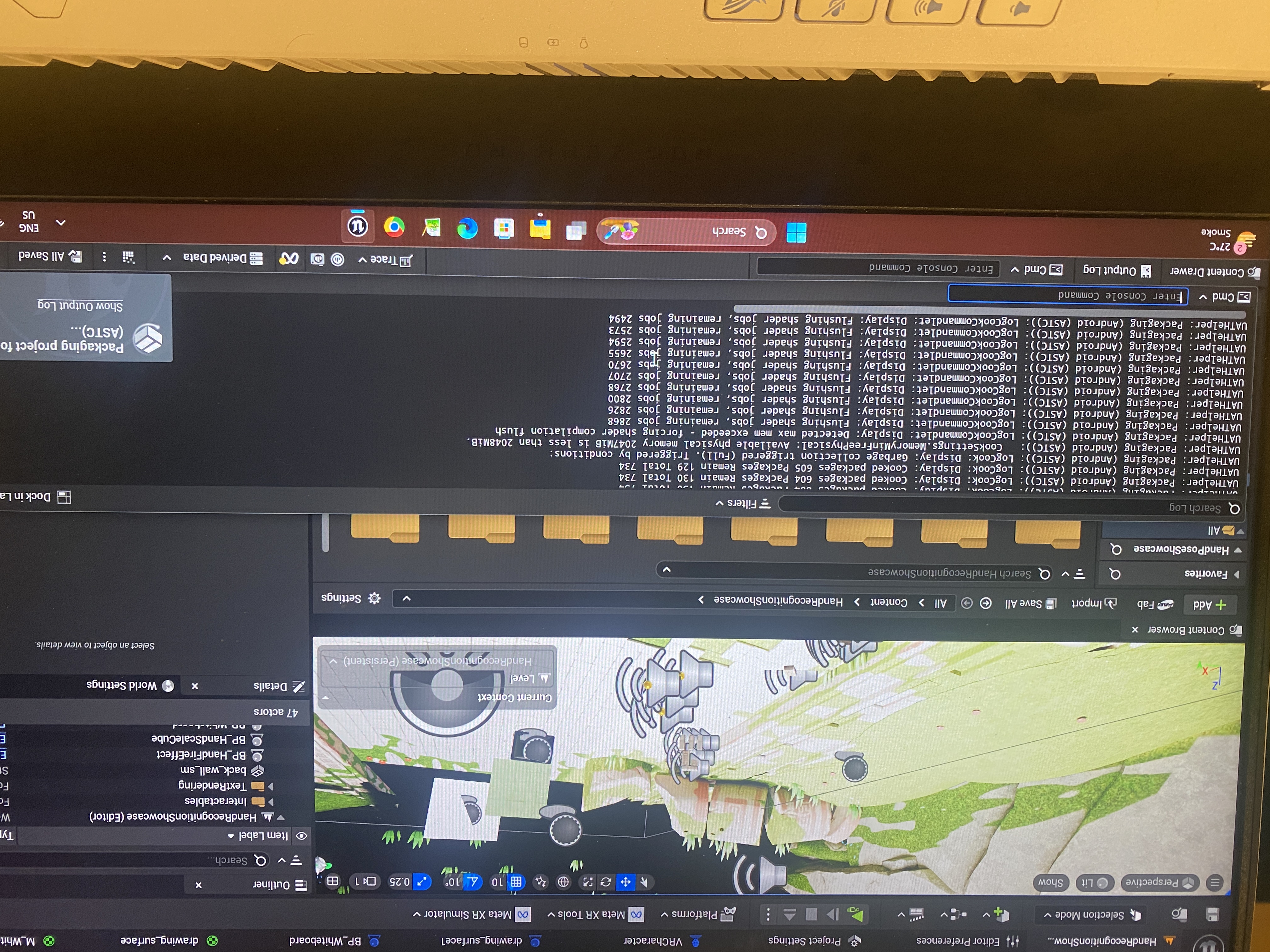

Scene Development:

The scene was built manually in Unreal Engine 5. The forest is designed to be as realistic as possible, with more than 500 individual meshes placed by hand to create a natural-looking environment.

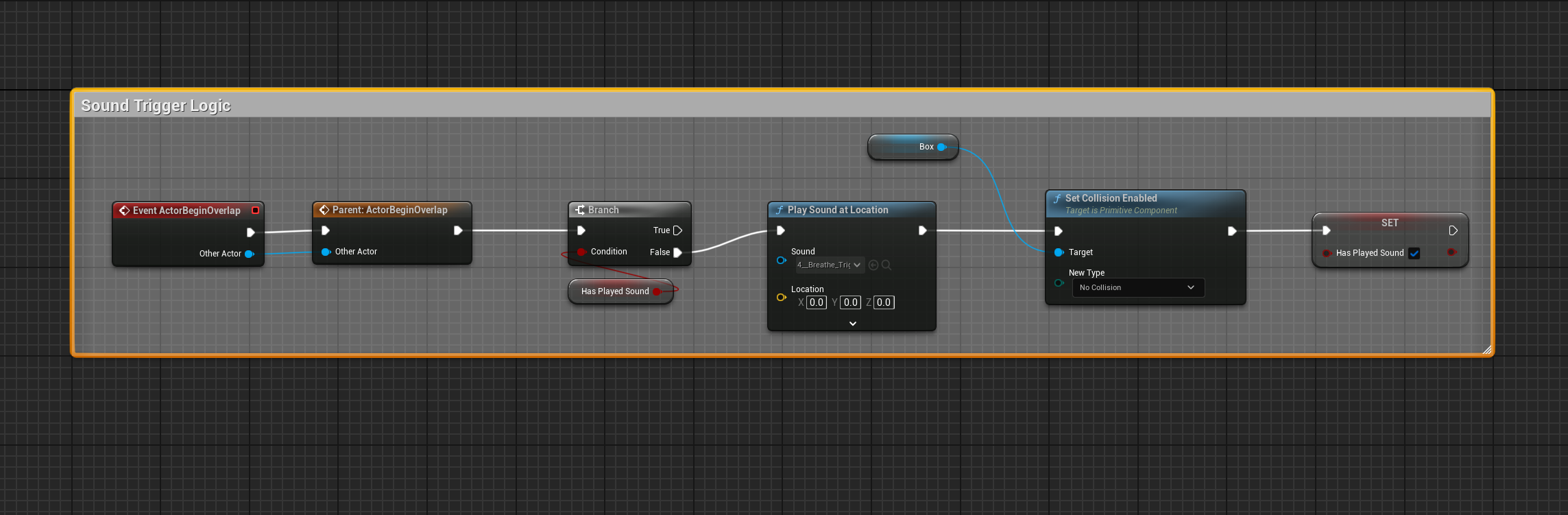

Development Stage: Blueprint Scripting

I developed the entire application logic with Unreal Engine's Blueprint visual scripting system. I applied object-oriented principles to keep the code scalable and maintainable for future development, and I gave methods and variables self-explanatory names.

Blueprint logic for turning, teleportation, pose recognition, drawing on the board, and sound:

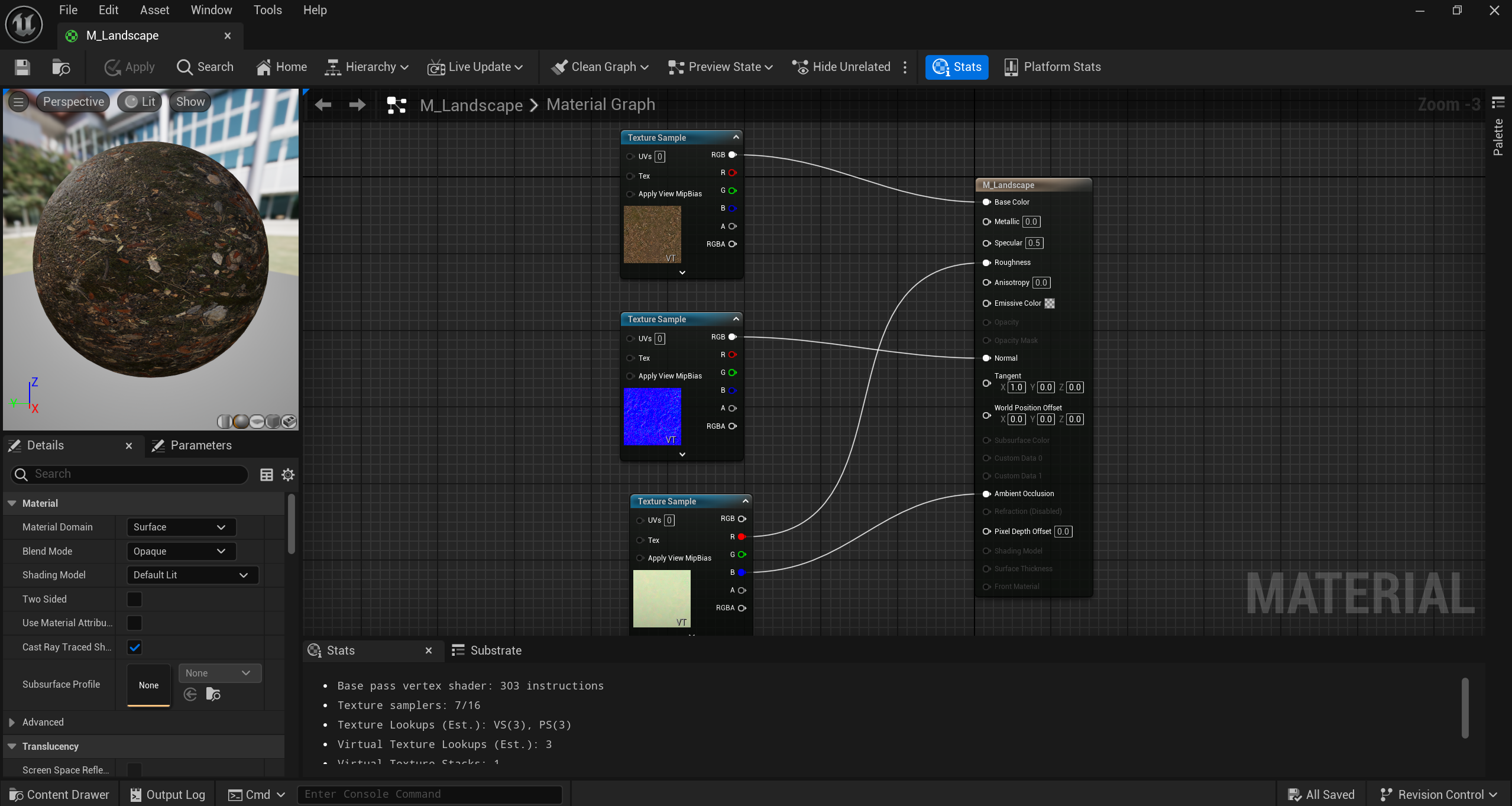
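The object-oriented structure described above can be illustrated with a C++ analogue of the Blueprint setup. Each feature module implements a shared interface, and a manager ticks all modules every frame. The class names and the `HandState` fields are hypothetical. In Blueprints, the same idea would be expressed through a parent Blueprint class or a Blueprint Interface.

```cpp
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical per-frame hand input shared by every interaction module.
struct HandState {
    bool rightPinch = false;    // teleport aim/confirm
    bool startGesture = false;  // gesture that starts the mentor conversation
    bool endGesture = false;    // gesture that ends the conversation
};

// Common interface: each feature (turn, teleport, pose recognition, drawing,
// sound) lives in its own module with a self-explanatory name.
class InteractionModule {
public:
    virtual ~InteractionModule() = default;
    virtual std::string Name() const = 0;
    virtual void Tick(const HandState& hands, float deltaSeconds) = 0;
};

class TeleportModule : public InteractionModule {
public:
    std::string Name() const override { return "Teleport"; }
    void Tick(const HandState& hands, float /*deltaSeconds*/) override {
        if (wasPinching_ && !hands.rightPinch) ++teleports_;  // move on release
        wasPinching_ = hands.rightPinch;
    }
    int Teleports() const { return teleports_; }

private:
    bool wasPinching_ = false;
    int teleports_ = 0;
};

class MentorModule : public InteractionModule {
public:
    std::string Name() const override { return "MentorConversation"; }
    void Tick(const HandState& hands, float /*deltaSeconds*/) override {
        if (hands.startGesture) talking_ = true;
        if (hands.endGesture) talking_ = false;
    }
    bool Talking() const { return talking_; }

private:
    bool talking_ = false;
};

// Owns the modules and forwards each frame to all of them, so a new feature
// is added as a new class rather than by editing existing ones.
class InteractionManager {
public:
    void Add(std::unique_ptr<InteractionModule> module) {
        modules_.push_back(std::move(module));
    }
    void Tick(const HandState& hands, float deltaSeconds) {
        for (auto& module : modules_) module->Tick(hands, deltaSeconds);
    }

private:
    std::vector<std::unique_ptr<InteractionModule>> modules_;
};
```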

Material Design Logic for the Floor:

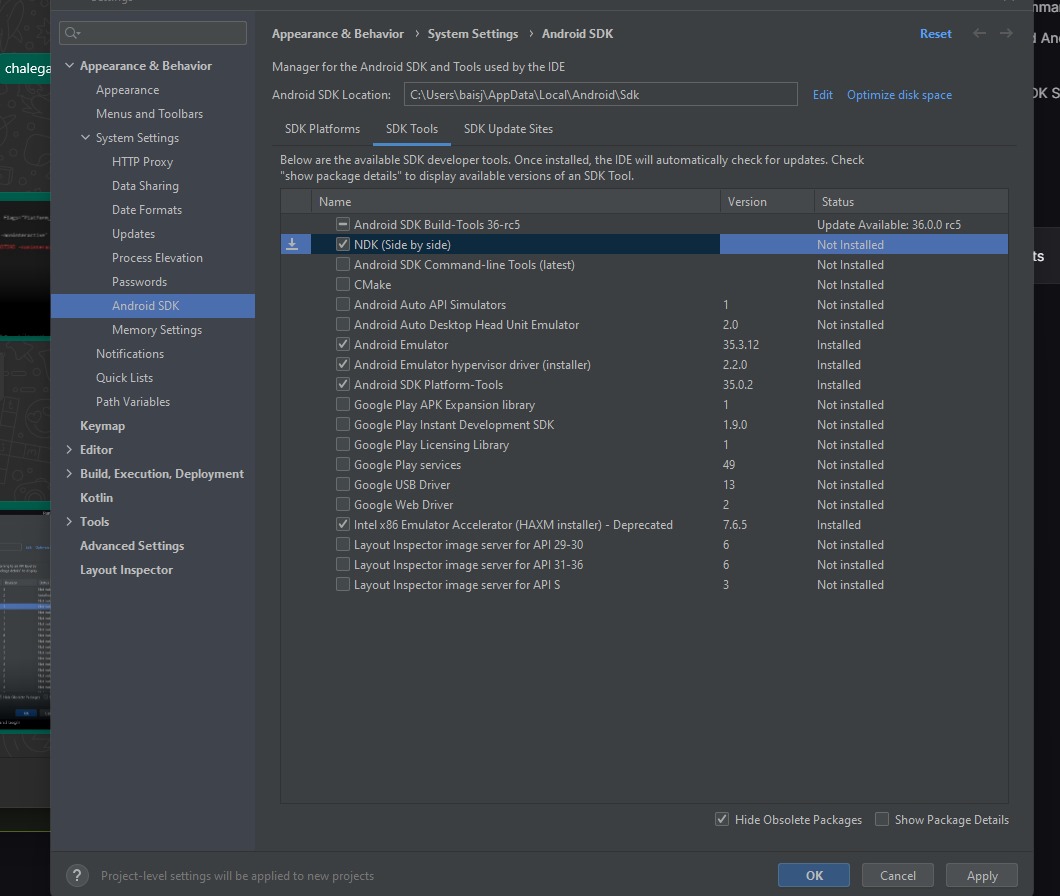

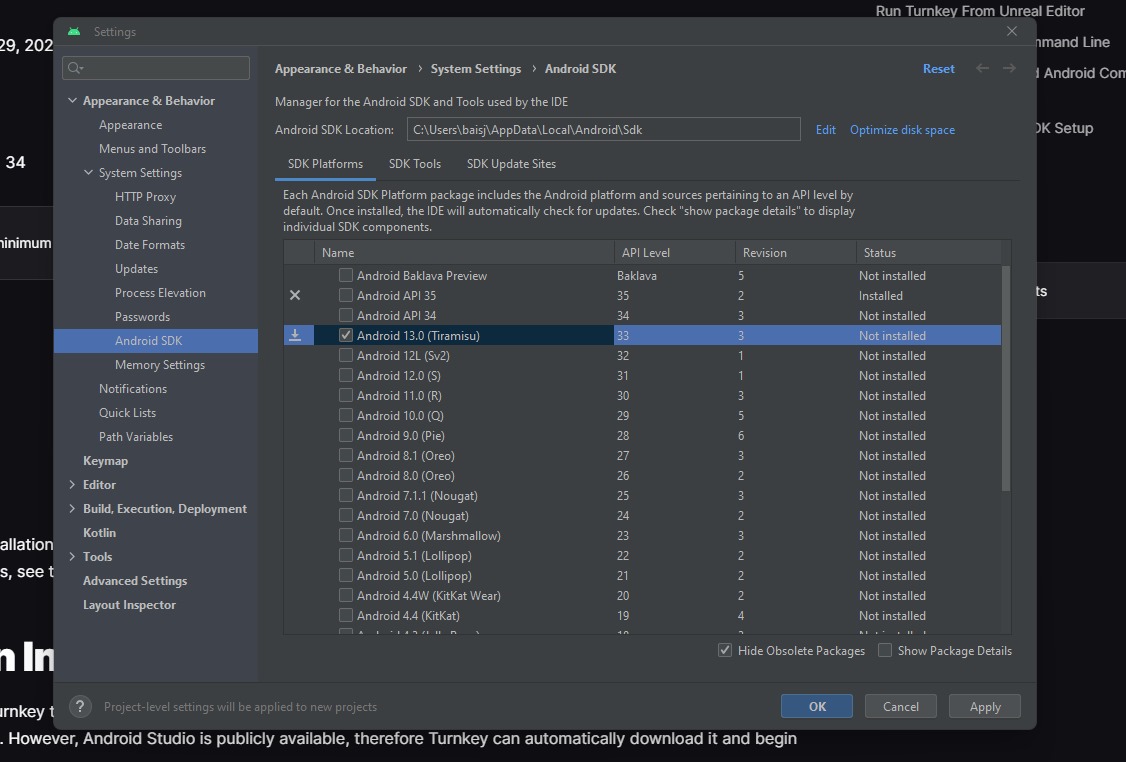

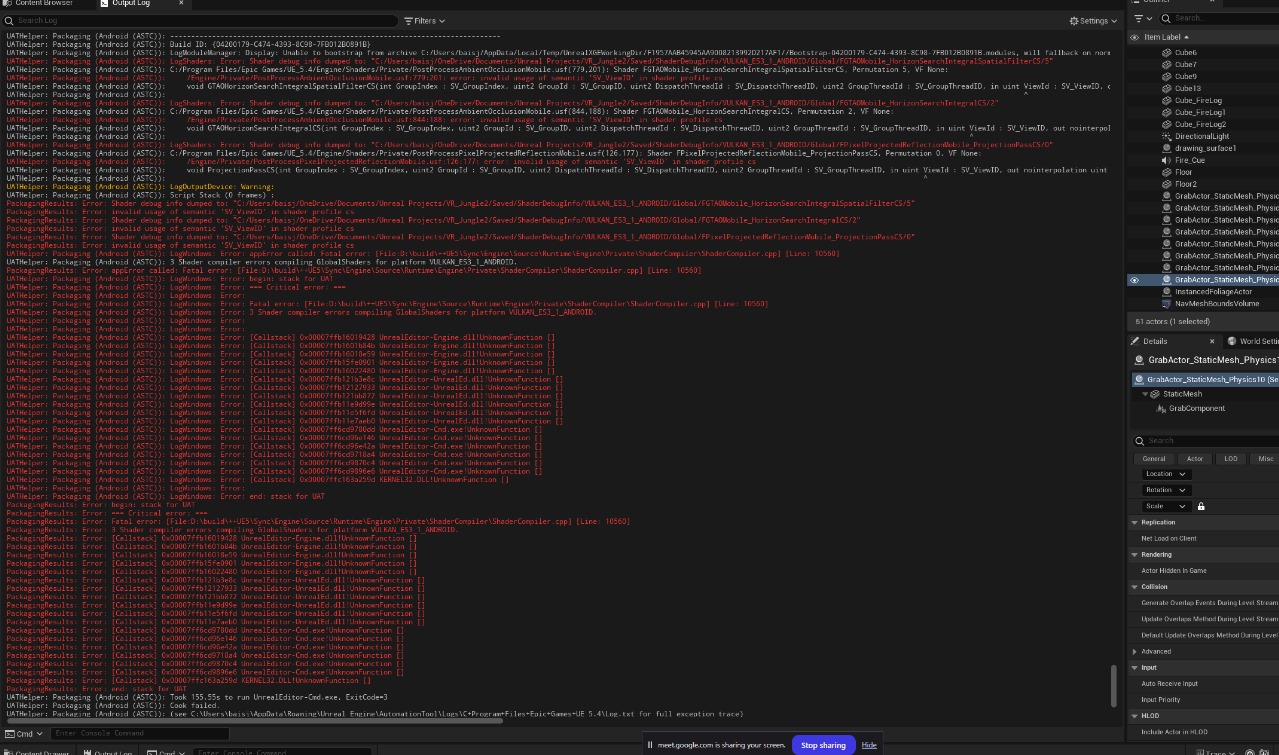

Development Stage: Setup for APK Creation

To install the app on the Meta Quest 3 standalone VR headset, it must be packaged as an Android APK file. The screenshots show the APK packaging setup and an error encountered during the build.

User Testing Stage:

User testing was conducted with a small group of participants, who were asked to complete the entire app experience. The feedback was overwhelmingly positive. Users appreciated the immersive environment and the AI mentor's guidance, and they expressed a desire for more features and interactions in future versions.

Project Timeline:

The project timeline shows how the work was planned to fit within the three-month duration.

Conclusion:

"Inner Path" demonstrates an innovative application of VR for accessible and engaging therapy. Through professional consultation, robust design, and detailed user interaction mechanics, our project successfully bridges the gap between clinical therapy and modern technology. By creating an immersive, soothing environment, we provide users with a supportive first step toward managing their mental health.

Reflection of My Work:

There were several significant challenges throughout this project. The first major hurdle was setting up the forest environment. Although we used 3D assets for the forest scene, creating a believable and immersive world on the standalone Meta Quest platform was extremely complex. The limited performance of the headset's mobile CPU and GPU meant that we could not use high-density assets or the real-time lighting typically available in PC VR development.

To overcome this, we manually placed more than 500 individual 3D meshes to form a natural-looking forest. Each asset had to be optimized. We disabled Nanite on the meshes, adjusted materials to be mobile-compatible (limiting the use of multilayer shaders), and baked all lighting because real-time dynamic lighting was not feasible. Even a small adjustment required hours of light baking and scene rebuilding, which made iteration very slow and demanding.

The second major challenge was removing reliance on physical controllers and achieving full hand-tracking functionality. Designing the app to be completely hand-tracked — including navigation, interaction, and communication with the AI mentor — required creating intuitive and reliable custom gestures. Hand-tracking on mobile VR still has limitations in accuracy and responsiveness, so fine-tuning the gestures to feel natural while maintaining system stability took a lot of effort, iteration, and testing.
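One common way to trade a little responsiveness for stability is to debounce gestures. A pose must be recognised continuously for a short time before it triggers, and it cannot fire again until the hand leaves the pose. This is a sketch of that general technique, not the project's code. `GestureDebouncer` and the 0.3 s default hold time are assumptions.

```cpp
// Fires a gesture only after it has been recognised continuously for
// holdSeconds, then waits until the pose is released before it can fire
// again. This filters out brief misclassifications from hand tracking.
class GestureDebouncer {
public:
    explicit GestureDebouncer(float holdSeconds = 0.3f) : hold_(holdSeconds) {}

    // Returns true exactly once per sustained gesture.
    bool Update(bool detected, float deltaSeconds) {
        if (!detected) {
            heldFor_ = 0.0f;
            fired_ = false;
            return false;
        }
        heldFor_ += deltaSeconds;
        if (!fired_ && heldFor_ >= hold_) {
            fired_ = true;
            return true;
        }
        return false;
    }

private:
    float hold_;
    float heldFor_ = 0.0f;
    bool fired_ = false;
};
```

A longer hold time reduces false triggers but makes the gesture feel slower, so the value would be tuned through user testing.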

Despite these challenges, we remained determined and adaptable. Through careful optimization, collaboration, and persistence, we were able to deliver a smooth, immersive experience that runs effectively on a standalone VR headset.